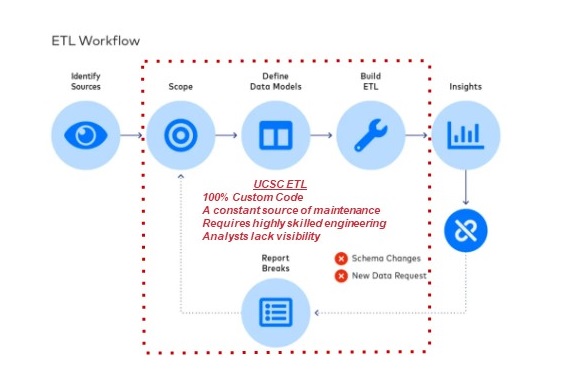

Traditional Approach to Data Integration

- The traditional approach to data integration, Extract-Transform-Load (ETL), dates from the 1970s and is so ubiquitous that “ETL” is often used interchangeably with data integration.

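- When ETL was first devised, most organizations operated under stringent technological constraints: storage, computing power, and bandwidth were scarce and expensive, so data was transformed before loading to keep stored volumes small.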

- Because transformations are dictated by the specific needs of analysts, every ETL pipeline is a complicated, custom-built solution.

- This ETL approach is the foundational mechanism for the legacy data warehouse environment, which relies on homegrown, custom code that requires constant maintenance by highly skilled engineers.

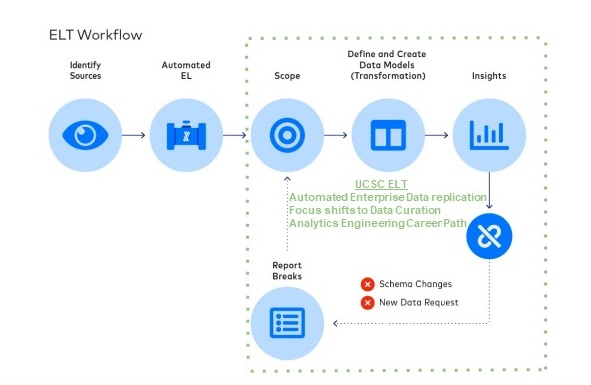

Modern Alternative to ETL

- The ability to store untransformed data in data warehouses enables a new data integration architecture, Extract-Load-Transform (ELT), in which data is loaded into a destination immediately upon extraction and the transformation step is moved to the end of the workflow.

- Under ELT, extracting and loading data are independent of transformation by virtue of being upstream of it. Although the transformation layer may still fail as upstream schemas or downstream data models change, these failures will not prevent data from being loaded into a destination.

- This ELT approach is the foundational mechanism for the Common Data Platform. It uses industry-standard, non-proprietary code that requires minimal maintenance from our data engineers, which frees analytics engineers to focus on data curation.
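The decoupling of loading from transformation described above can be sketched in a few lines. This is a minimal illustration, not the Common Data Platform's implementation: it assumes SQLite as a stand-in for the warehouse, and the table names (`raw_orders`, `orders`), the functions, and the sample records are all hypothetical. Raw records are loaded untouched first; the transformation runs afterwards, so a simulated upstream schema change breaks the transformation without blocking the load.

```python
import json
import sqlite3

# Hypothetical source records. BATCH_2 simulates an upstream schema change:
# the "amount" field has been renamed to "total".
BATCH_1 = [{"id": 1, "amount": 10.0}, {"id": 2, "amount": 5.5}]
BATCH_2 = [{"id": 3, "total": 7.25}]


def extract_and_load(conn: sqlite3.Connection, records: list[dict]) -> int:
    """E + L: store records untransformed, as raw JSON text."""
    conn.execute("CREATE TABLE IF NOT EXISTS raw_orders (payload TEXT NOT NULL)")
    conn.executemany(
        "INSERT INTO raw_orders (payload) VALUES (?)",
        [(json.dumps(r),) for r in records],
    )
    conn.commit()
    return len(records)


def transform(conn: sqlite3.Connection) -> None:
    """T: rebuild the analytics model from the raw table, after loading."""
    rows = conn.execute("SELECT payload FROM raw_orders").fetchall()
    # Parse everything first; raises KeyError if a record lacks "amount",
    # leaving the previously built `orders` table untouched.
    parsed = [(p["id"], p["amount"]) for p in (json.loads(r[0]) for r in rows)]
    conn.execute("DROP TABLE IF EXISTS orders")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", parsed)
    conn.commit()


def run_pipeline(conn: sqlite3.Connection, records: list[dict]) -> tuple[int, str]:
    """Load first, then transform; a transform failure does not undo the load."""
    loaded = extract_and_load(conn, records)
    try:
        transform(conn)
        status = "transformed"
    except KeyError as exc:
        status = f"transform failed: missing field {exc}"
    return loaded, status


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    print(run_pipeline(conn, BATCH_1))  # (2, 'transformed')
    print(run_pipeline(conn, BATCH_2))  # (1, "transform failed: missing field 'amount'")
    print(conn.execute("SELECT COUNT(*) FROM raw_orders").fetchone()[0])  # 3
    print(conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0])  # 2
```

Because the raw payloads are retained, the `orders` model can be rebuilt once the transformation is updated for the new schema, without re-extracting anything from the source.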

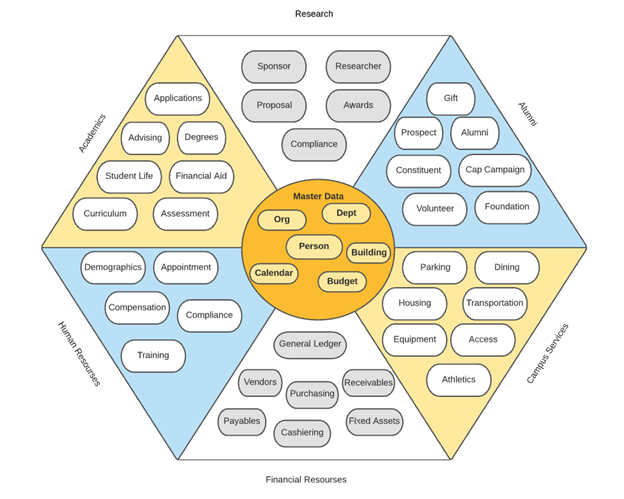

Enterprise Data Model

- The enterprise data model is a foundational component for the success of the Common Data Platform.

- The enterprise data model joins data from multiple domains into a single source of trusted facts, thus enabling the campus to become a more nimble, data-driven organization.